Abstract

Scientific experimentation, a cornerstone of human progress, demands rigor in reliability, methodical control, and interpretability to yield meaningful results. Despite the growing capabilities of large language models (LLMs) in automating different aspects of the scientific process, automating rigorous experimentation remains a significant challenge. To address this gap, we propose Curie, an AI agent framework designed to embed rigor into the experimentation process through three key components: an intra-agent rigor module to enhance reliability, an inter-agent rigor module to maintain methodical control, and an experiment knowledge module to enhance interpretability. To evaluate Curie, we design a novel experimental benchmark composed of 46 questions across four computer science domains, derived from influential research papers and widely adopted open-source projects. Compared to the strongest baseline tested, Curie achieves a 3.4× improvement in correctly answering experimental questions.

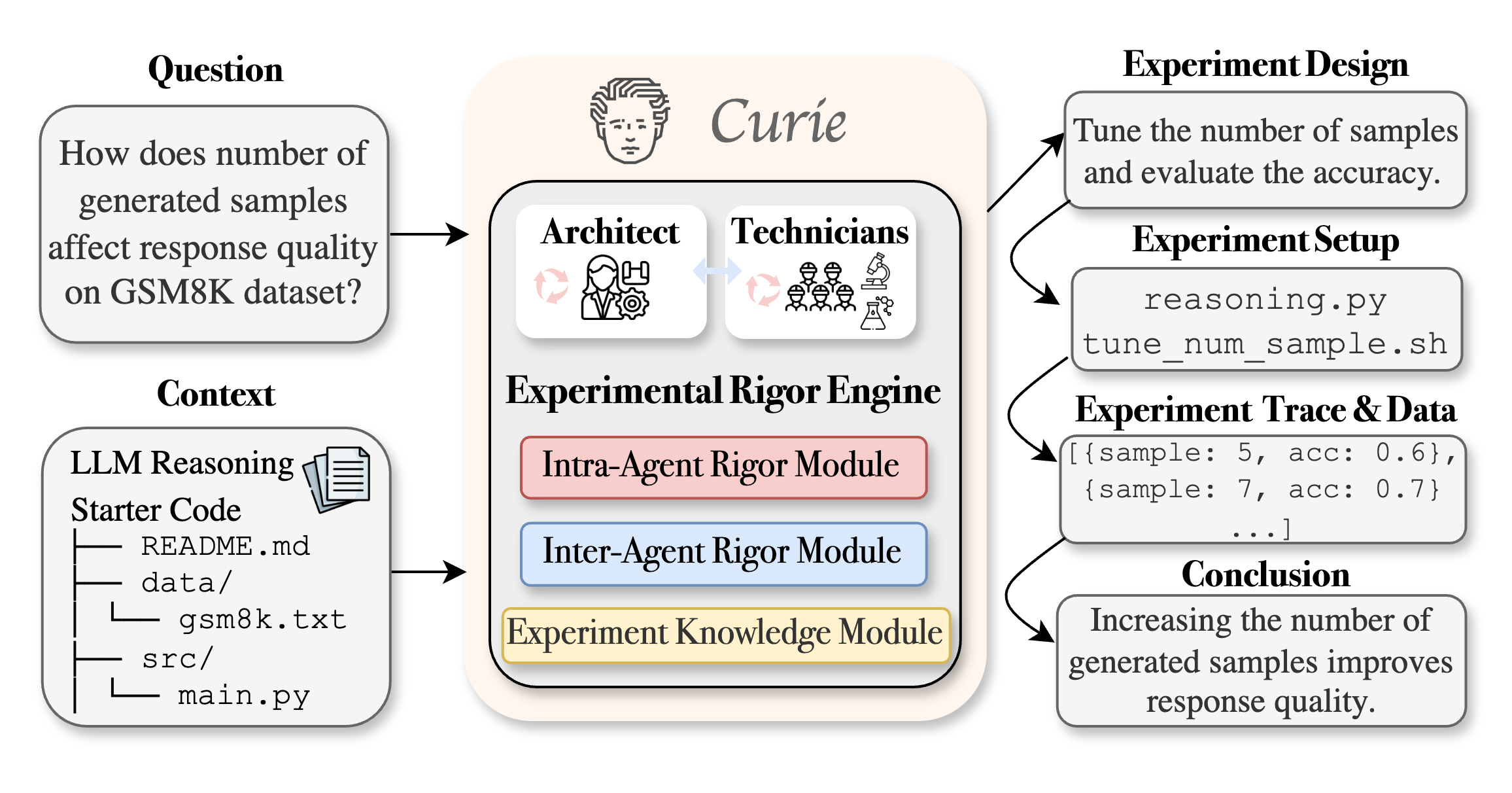

Figure 1: Curie high-level overview.

Curie takes an experimental question and relevant context (e.g., domain-specific knowledge or starter code) as input. The Architect Agent generates high-level experimental plans, coordinates the process, and reflects on findings to guide subsequent steps. Working in unison, our Technician Agents focus on carefully implementing and executing controlled experiments following these plans.

At the core of Curie, the Experimental Rigor Engine preserves agent creativity while embedding rigor seamlessly throughout the experimentation process. This is achieved via three key modules: (1) The Intra-Agent Rigor Module safeguards reliability within individual agents by enforcing a set of extensible rigor policies (e.g., validating that experiment plans align with objectives and setups are reproducible). (2) The Inter-Agent Rigor Module maintains methodical control over agent coordination, ensuring correct task transitions and efficient task scheduling. (3) Finally, the Experiment Knowledge Module enhances interpretability by maintaining well-structured documentation, enabling seamless collaboration in large-scale experiments.
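To make the idea of extensible rigor policies concrete, here is a minimal Python sketch of how per-agent policy checks might be composed. It is an illustration only, not Curie's actual implementation: the names `ExperimentPlan`, `RigorPolicy`, `plan_states_objective`, `setup_is_reproducible`, and `check_plan` are hypothetical, and the two checks are crude stand-ins for the plan-alignment and reproducibility validation described above.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class ExperimentPlan:
    """Hypothetical plan record; the fields are assumptions for this sketch."""
    question: str
    objective: str
    setup_commands: List[str] = field(default_factory=list)
    random_seed: Optional[int] = None


# A policy inspects a plan and returns None on success or a violation message.
RigorPolicy = Callable[[ExperimentPlan], Optional[str]]


def plan_states_objective(plan: ExperimentPlan) -> Optional[str]:
    # Crude proxy for "plan aligns with objectives": an objective must be stated.
    if not plan.objective.strip():
        return "plan has no stated objective"
    return None


def setup_is_reproducible(plan: ExperimentPlan) -> Optional[str]:
    # Crude proxy for reproducibility: recorded setup steps and a fixed seed.
    if not plan.setup_commands:
        return "no setup commands recorded"
    if plan.random_seed is None:
        return "no random seed fixed"
    return None


# Extensible: further policies can be appended without changing check_plan.
DEFAULT_POLICIES: List[RigorPolicy] = [plan_states_objective, setup_is_reproducible]


def check_plan(plan: ExperimentPlan, policies: List[RigorPolicy]) -> List[str]:
    """Run every policy in order and collect the violation messages."""
    violations = []
    for policy in policies:
        message = policy(plan)
        if message is not None:
            violations.append(message)
    return violations
```

In this sketch a plan would proceed to execution only when `check_plan` returns an empty list; otherwise the violations could be handed back to the agent that produced the plan for revision.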

Citation

Patrick Tser Jern Kon, Jiachen Liu, Qiuyi Ding, Yiming Qiu, Zhenning Yang, Yibo Huang, Jayanth Srinivasa, Myungjin Lee, Mosharaf Chowdhury, and Ang Chen. “Curie: Toward Rigorous and Automated Scientific Experimentation with AI Agents.” arXiv, 2025.